Introduction

As AI adoption accelerates, enterprise architects face new challenges in managing interactions with Large Language Models (LLMs). The Model Context Protocol (MCP) is a framework designed to streamline how context is handled within LLM systems, improving consistency and control.

For organizations seeking to improve operational efficiency and user experience, MCP provides a structured environment for context management across the systems that exchange contextual information. This article covers the key features, benefits, and applications of the Model Context Protocol.

What is the Model Context Protocol (MCP)?

The Model Context Protocol is a standardized approach that defines how context data is structured, shared, and managed across LLM-based applications. By establishing clear protocols, MCP enables more predictable model behavior and efficient handling of complex inputs. It is designed to manage and convey contextual information between different systems. Here, context refers to the settings that surround an event or an interaction, including user preferences, environmental factors, and historical interactions. By using MCP, systems can share and interpret context effectively, which improves their ability to respond dynamically to user needs.
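To make the idea of structured, shareable context concrete, here is a minimal Python sketch. It groups the three kinds of context named above (user preferences, environmental factors, historical interactions) into one record and serializes it to JSON so another system could read it back. The class name `ContextRecord` and all field names are assumptions made for illustration; they are not taken from the MCP specification.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ContextRecord:
    """Hypothetical container for the three kinds of context named above."""
    user_preferences: dict = field(default_factory=dict)
    environment: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def to_json(self) -> str:
        # sort_keys gives a stable serialization, so two systems
        # produce identical text for identical context.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "ContextRecord":
        # Rebuild the record from the JSON text produced by to_json().
        return cls(**json.loads(text))


record = ContextRecord(
    user_preferences={"language": "en", "tone": "formal"},
    environment={"channel": "web", "timezone": "UTC"},
    history=["Asked about invoice status"],
)
wire_text = record.to_json()
restored = ContextRecord.from_json(wire_text)
```

A single agreed-upon shape like this is what lets a receiving system interpret context without guessing at its layout.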

Key Features of the Model Context Protocol

1. Standardization: MCP establishes a common framework for contextual data, which helps ensure interoperability between disparate systems. This standardization reduces misunderstandings and enhances data exchange efficiency.

2. Scalability: The protocol is designed to scale across a wide variety of applications and industries, from smart homes to healthcare systems, so organizations can expand their services without major overhauls.

3. Real-time Data Handling: With real-time data processing capabilities, MCP allows systems to react immediately to changes in context. This is vital in scenarios where timely responses can significantly impact user satisfaction and system performance.

4. Versatility: MCP is adaptable to different technologies and formats, making it suitable for integration with existing systems. Whether for web applications, mobile apps, or IoT devices, MCP can be utilized to enhance contextual communication.
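The real-time handling described in the list above can be pictured as a small publish/subscribe loop: a shared store holds context values and notifies interested components the moment a value changes. The sketch below is one possible shape for this, using only the Python standard library. `ContextStore`, `subscribe`, and `update` are hypothetical names chosen for this example, not part of any MCP API.

```python
from typing import Any, Callable

Listener = Callable[[str, Any], None]


class ContextStore:
    """Hypothetical in-memory store that pushes context changes to listeners."""

    def __init__(self) -> None:
        self._values: dict[str, Any] = {}
        self._listeners: list[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def update(self, key: str, value: Any) -> None:
        # Skip notifications when nothing actually changed.
        if key in self._values and self._values[key] == value:
            return
        self._values[key] = value
        for listener in self._listeners:
            listener(key, value)


events: list[tuple[str, Any]] = []
store = ContextStore()
store.subscribe(lambda key, value: events.append((key, value)))

store.update("occupancy", "home")
store.update("occupancy", "home")  # unchanged, so no notification
store.update("occupancy", "away")
```

Suppressing duplicate updates keeps listeners from reacting to noise, which matters when many devices or services report the same state repeatedly.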

Benefits of Implementing the Model Context Protocol

Improved User Experience: By enabling systems to understand and adapt to user context, MCP significantly enhances user experience. For example, an application can provide personalized recommendations based on previous interactions and current preferences.

Increased Efficiency: By sharing contextual information, systems can streamline processes and reduce redundancy. For instance, in a smart home, different devices can communicate through MCP to optimize energy usage based on occupancy and preferences.

Enhanced Decision-Making: Organizations can leverage the insights gained through contextual data to make informed decisions, improving overall business strategies and operational practices.

Facilitated Collaboration: MCP allows for better collaboration between applications and services, which can lead to more integrated solutions that meet complex user needs.

Applications of the Model Context Protocol

1. Smart Homes: In smart home systems, MCP can enable devices such as lights, thermostats, and security systems to communicate effectively. For example, when a user leaves the house, the system can adjust heating and turn off lights automatically based on past behavior.

2. Healthcare: In healthcare settings, MCP can enhance patient monitoring systems by sharing real-time data about patient conditions, enabling healthcare providers to respond rapidly and effectively.

3. E-commerce: Online retailers can utilize MCP to analyze customer behaviors and preferences, delivering personalized shopping experiences that can drive sales and customer loyalty.

4. Smart Cities: In urban planning and management, MCP can help various infrastructures communicate, optimizing traffic flow, enhancing public safety, and improving resource allocation.
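As an illustration of the smart home case above, the following sketch turns a shared context into device commands when the user leaves the house. It is a simplified model under stated assumptions: the context keys (`occupancy`, `lights`, `thermostat_c`, `learned_away_temp_c`) and the command strings are invented for this example and do not correspond to any real device API.

```python
def plan_departure_actions(context: dict) -> list[str]:
    """Return device commands for a 'user left home' event.

    `context` is a hypothetical shared context dictionary; the keys used
    here are illustrative only.
    """
    actions = []
    if context.get("occupancy") != "away":
        return actions
    # Turn off only the lights that are currently on.
    for room, is_on in sorted(context.get("lights", {}).items()):
        if is_on:
            actions.append(f"lights.off:{room}")
    # Use the setback temperature learned from past behavior, if any.
    setback = context.get("learned_away_temp_c")
    if setback is not None and context.get("thermostat_c") != setback:
        actions.append(f"thermostat.set:{setback}")
    return actions


shared_context = {
    "occupancy": "away",
    "lights": {"kitchen": True, "hall": False, "bedroom": True},
    "thermostat_c": 21.0,
    "learned_away_temp_c": 17.0,
}
actions = plan_departure_actions(shared_context)
# actions == ["lights.off:bedroom", "lights.off:kitchen", "thermostat.set:17.0"]
```

Because every device reads from the same context, the lighting and heating decisions stay consistent with each other instead of being computed separately.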

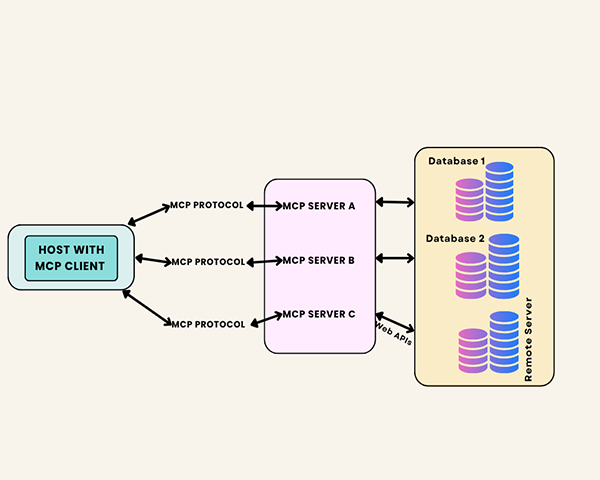

How MCP Works in LLM Systems

MCP operates by formalizing the flow and format of contextual information passed to the model. It governs the interaction between user prompts, system instructions, and auxiliary data, ensuring the LLM interprets context accurately. This management enhances performance in tasks requiring multi-turn conversations or domain-specific knowledge.
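One way to picture this flow is a small assembly step that takes system instructions, auxiliary data, and conversation turns, and always emits them in the same order. The sketch below assumes a simple role/content message list of the kind many chat-style LLM APIs accept. The role labels, the `ContextPart` class, and the `assemble` function are hypothetical and are not defined by MCP.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextPart:
    role: str      # "system", "context", "user", or "assistant" (hypothetical)
    content: str


# Fixed ordering: instructions first, then auxiliary data, then the turns.
ROLE_ORDER = {"system": 0, "context": 1, "user": 2, "assistant": 2}


def assemble(parts: list[ContextPart]) -> list[dict]:
    """Order parts deterministically and emit role/content messages."""
    unknown = [p.role for p in parts if p.role not in ROLE_ORDER]
    if unknown:
        raise ValueError(f"unknown roles: {unknown}")
    # sorted() is stable, so conversation turns keep their original order.
    ordered = sorted(parts, key=lambda p: ROLE_ORDER[p.role])
    return [{"role": p.role, "content": p.content} for p in ordered]


parts = [
    ContextPart("user", "What is my balance?"),
    ContextPart("context", "account_tier=gold"),
    ContextPart("system", "Answer concisely."),
    ContextPart("assistant", "Your balance is available in the app."),
    ContextPart("user", "Can you show it here?"),
]
messages = assemble(parts)
roles = [m["role"] for m in messages]
# roles == ["system", "context", "user", "assistant", "user"]
```

Rejecting unknown roles up front is a deliberate choice: a malformed part fails loudly instead of silently reaching the model in an unexpected position.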

MCP vs. System Prompts

Unlike traditional system prompts that inject instructions in an ad hoc manner, MCP introduces a modular and explicit framework for context handling. This reduces ambiguity, improves reusability, and supports enterprise-grade AI deployments by allowing better auditability and governance.
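The contrast can be sketched in code. Instead of one hand-edited prompt string, context is built from named, versioned modules, and each build returns a record of exactly which modules went in. This is a minimal illustration of the modularity and auditability claims above, not an MCP implementation; `ContextModule`, `build_prompt`, and the audit field names are assumptions for this example.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextModule:
    name: str
    version: str
    text: str

    def fingerprint(self) -> str:
        # Short content hash so an audit log can show exactly what was sent.
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:12]


def build_prompt(modules: list[ContextModule]) -> tuple[str, list[dict]]:
    """Join modules into one prompt and return an audit trail alongside it."""
    prompt = "\n\n".join(m.text for m in modules)
    audit = [
        {"name": m.name, "version": m.version, "sha256_12": m.fingerprint()}
        for m in modules
    ]
    return prompt, audit


tone = ContextModule("tone", "1.0", "Respond in a formal tone.")
policy = ContextModule("policy", "2.3", "Never reveal account numbers.")
prompt, audit = build_prompt([tone, policy])
```

The same `policy` module can be reused across applications, and the audit entries give reviewers a stable record of which version of each instruction a given request carried.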

Future Directions in Context Management

Looking ahead, MCP could evolve to support dynamic context adaptation, cross-model interoperability, and integration with AI governance standards. Such enhancements would help architects design AI systems that are robust, compliant, and scalable in enterprise environments.

Conclusion

Incorporating the Model Context Protocol into enterprise AI architectures advances LLM context management, enabling more reliable and controlled AI interactions. For architects, understanding MCP is essential to mastering prompt engineering and innovating in AI system design.